In this post I’m going to differentiate between:

- Measures of things

- Targets on (measures of things)

- Measures of (targets on (measures of things)); and

- Targets on (measures of (targets on (measures of things)))

Wow, that last one is hard to write, let alone say out loud! You might think that it’s a nonsense (which it is) but, sadly, it’s very common.

Note: I added the brackets to (hopefully) make really clear how each one builds on the last.

I’ll attempt to explain…

1. Measures of things:

Seems straightforward enough: I’m interested in better understanding a thing, so I’d like to measure it¹.

Some examples…

A couple of personal ones:

- What’s my (systolic) blood pressure level? or

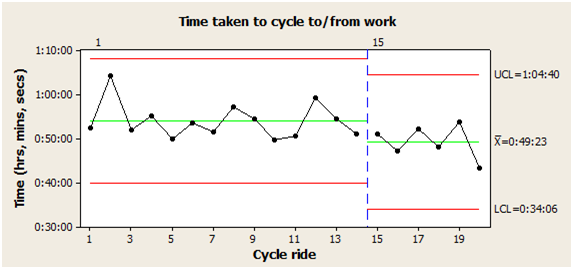

- How quickly do I ride my regular cycle route?

A couple of (deliberately) generic work ones:

- How long does it take us to achieve a thing? or

- How many things did we achieve over a given period?

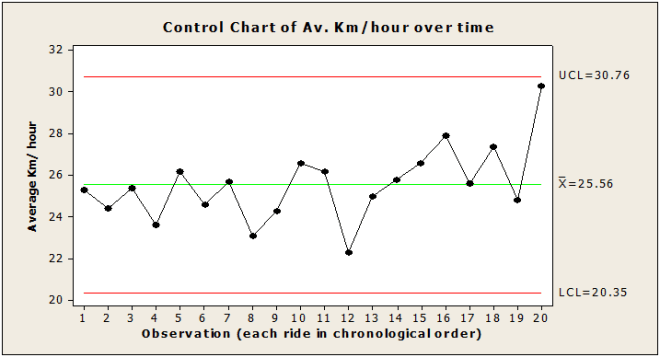

Here’s a graph of a measure of a thing (in chronological order):

Nice, we can clearly see what’s going on. We achieved 13 things in week 1. Each thing took us anywhere between 2 and 36 days to achieve…and there’s lots of variation in between.

It doesn’t surprise me that it varies² – it would be weird if all 13 things took, say, exactly 19 days (unless this had been structurally designed into the system). There will likely be all sorts of reasons for the variation.

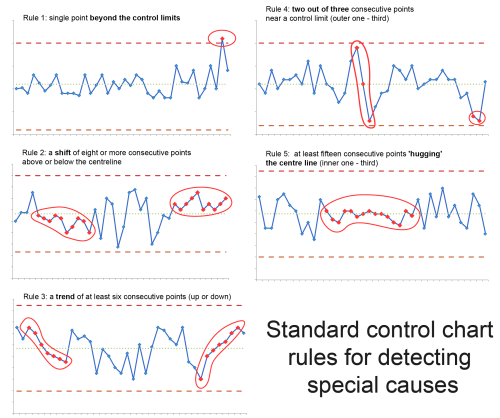

However, whilst I ‘get’ that there is (and always will be) variation, the graph allows us to think about the nature and degree of that variation: Does it vary more than we would expect/can explain?³ Are there any repeating patterns? Unusual one-offs? Statistically relevant trends?

Such a review allows us to ask good questions, which we can then investigate and learn from.

“Every observation, numerical or otherwise, is subject to variation. Moreover, there is useful information in variation.” (Deming)
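If you want to put a number on ‘does it vary more than we would expect?’, one common tool is the XmR (process behaviour) chart popularised by Donald Wheeler. It isn’t named in this post, so treat it as a swapped-in illustration rather than the analysis behind the graph above. Its natural process limits sit at the average plus or minus 2.66 times the average moving range. Here’s a minimal Python sketch; the function names and the durations are hypothetical, because the post’s real values only appear in the graph.

```python
from statistics import mean

def natural_process_limits(values):
    """Return (lower, centre, upper) natural process limits for an XmR chart.

    Centre line = average of the values; limits = centre +/- 2.66 * average
    moving range (the absolute difference between consecutive values).
    """
    if len(values) < 2:
        raise ValueError("need at least two values to compute a moving range")
    centre = mean(values)
    moving_ranges = [abs(b - a) for a, b in zip(values, values[1:])]
    spread = 2.66 * mean(moving_ranges)
    return centre - spread, centre, centre + spread

def points_outside(values):
    """1-based instance numbers that fall outside the natural process limits."""
    lower, _, upper = natural_process_limits(values)
    return [i for i, v in enumerate(values, start=1) if v < lower or v > upper]

# Hypothetical durations in days, invented for illustration (not the post's data)
durations = [12, 19, 7, 25, 15, 30, 9, 22, 18, 11, 27, 14, 20]
lower, centre, upper = natural_process_limits(durations)
print(f"Centre {centre:.1f} days, limits {lower:.1f} to {upper:.1f}")
print("Instances outside the limits:", points_outside(durations))
```

This sketch only flags individual points outside the limits; the extra run rules used to spot trends are left out. A lower limit below zero simply means there is no low-side signal to look for.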

2. Targets on (measures of things):

Let’s say that we’ve been asked to achieve a certain (arbitrary⁴) target.

Here’s an arbitrary target of 30 days (the red line) set against our measure:

And here’s how we are doing against that target, with some visual ‘traffic lighting’ added:

| Instance (X) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Target of 30 days met? (Yes/No) | N | Y | Y | N | Y | Y | Y | Y | Y | Y | Y | N | Y |

We’ve now turned a rich analogue signal into a dull digital ‘on/off’ switch.

If we only look at whether we met the target or not (red vs. green), then we can no longer see the detail that allowed us to ask the good questions.

- We met ‘target’ for instances 2 and 3…but the measures for each were quite different

- Conversely, we met ‘target’ for instances 5 all the way through to 11 and then ‘suddenly’ we didn’t…which would likely prompt us to question instance 12 intensely (and yet not see, let alone ponder, the variation between 5 and 11).

The target is causing us to ask the wrong questions⁵, and miss asking the right ones.
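Here’s that ‘dumbing down’ as a few lines of Python. It’s a minimal sketch assuming that exactly 30 days counts as meeting the target (the post doesn’t say either way); the function name and the durations are hypothetical.

```python
TARGET_DAYS = 30  # the arbitrary 30-day target from the post

def traffic_light(durations, target=TARGET_DAYS):
    """Collapse each duration (days) into 'Y' (target met) or 'N' (missed).

    Assumption: a thing taking exactly `target` days counts as meeting it.
    """
    return ["Y" if days <= target else "N" for days in durations]

# Hypothetical durations, invented for illustration (not the post's data)
print(traffic_light([3, 29, 31, 36]))  # ['Y', 'Y', 'N', 'N']
```

Things taking 3 and 29 days get the same ‘Y’, whilst 29 and 31 days, only two days apart, land on opposite sides of the switch.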

3. Measures of (targets on (measures of things)):

But I’m a fan of measures! So, let’s show a measure over time of how we are doing against our target.

In week 1 we met our 30-day target for 10 out of our 13 instances, which is 77%. Sounds pretty good!

Here’s a table showing how many times we met target in each of the five weeks (week 1 plus the next four):

| Week | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| Things achieved | 13 | 15 | 14 | 11 | 12 |
| Number meeting 30-day target | 10 | 14 | 12 | 7 | 8 |
| % meeting 30-day target | 77% | 93% | 86% | 64% | 67% |
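The percentages in that table are just ‘number meeting target’ divided by ‘things achieved’, rounded to the nearest whole percent. A quick Python check, using only the counts from the table and the week 1 Y/N row from point 2 (the function name is hypothetical):

```python
# Counts copied from the table: week -> (things achieved, number meeting 30-day target)
weeks = {1: (13, 10), 2: (15, 14), 3: (14, 12), 4: (11, 7), 5: (12, 8)}

def percent_meeting(achieved, met):
    """Share of things meeting the 30-day target, as a whole-number percentage."""
    return round(100 * met / achieved)

# Week 1's Y/N row from point 2 gives the same count of 10
week1_flags = "N Y Y N Y Y Y Y Y Y Y N Y".split()
assert week1_flags.count("Y") == weeks[1][1]

for week, (achieved, met) in weeks.items():
    print(f"Week {week}: {met}/{achieved} = {percent_meeting(achieved, met)}%")

print("Total things achieved:", sum(a for a, _ in weeks.values()))  # 65
```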

Let’s graph that:

It looks like we’ve created a useful graph, just like in point 1.

But we would be fooling ourselves – we are measuring the movement of the dumbed-down ‘yes/no’ digital switch, not the actual signal. The information has been stripped out.

For example, there might have been huge turbulence in our measure of things in, say, week 3, whilst there might have been very little variation in week 4 (with lots of things only just missing our arbitrary ‘target’). We can’t see this but, if we want to understand, it would be important to know. We are blind, but we think we can see.
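To make ‘blind but we think we can see’ concrete, here are two hypothetical weeks (invented, not from the post’s data) that score exactly the same percentage whilst behaving completely differently. It’s a sketch assuming ‘met’ means 30 days or fewer, using the population standard deviation as a simple measure of spread.

```python
from statistics import pstdev

def percent_meeting(durations, target=30):
    """Whole-number % of durations meeting the target (assumes <= target counts as met)."""
    met = sum(1 for d in durations if d <= target)
    return round(100 * met / len(durations))

# Two hypothetical weeks, invented for illustration only
turbulent_week = [2, 4, 38, 40]  # huge swings either side of the target
calm_week = [28, 30, 31, 31]     # tightly grouped, two only just missing

for name, week in [("turbulent", turbulent_week), ("calm", calm_week)]:
    print(f"{name}: {percent_meeting(week)}% met, spread (pstdev) {pstdev(week):.1f} days")
```

Both weeks report 50%, yet one spreads over more than a month and the other over three days. The percentage alone can’t tell them apart.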

4. Targets on (measures of (targets on (measures of things))):

And so, we get to the final iteration:

How about setting an arbitrary target on the proportion of things meeting our arbitrary target…such as achieving things within 30 days 80% of the time (the red line)…

And here’s the table showing how we are doing against that target:

| Week | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|
| 80% target on 30-day target met? | N | Y | Y | N | N |

Which is a double dumbing-down!

We’ve now got absolutely no clue as to what is actually going on!!!

But (and this is much worse) we ‘think’ we are looking at important measures and (are asked to) conclude things from this.

The table (seemingly) tells us that we didn’t do well in weeks 1, 4 and 5, but we did in weeks 2 and 3…
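The Y/N row can be reproduced from the weekly counts in a couple of lines of Python. This sketch assumes that exactly 80% would count as meeting the target, and compares the exact fraction rather than the rounded percentage (the two agree for these five weeks); the function name is hypothetical.

```python
# Counts copied from the weekly table: (things achieved, number meeting 30-day target)
weeks = [(13, 10), (15, 14), (14, 12), (11, 7), (12, 8)]
TARGET_PROPORTION = 0.80  # the arbitrary 80% target

def met_80_target(achieved, met, target=TARGET_PROPORTION):
    """True if the exact share meeting the 30-day target reaches the 80% target."""
    return met / achieved >= target

flags = ["Y" if met_80_target(a, m) else "N" for a, m in weeks]
print(flags)  # ['N', 'Y', 'Y', 'N', 'N']
```

Thirteen, fifteen or fourteen rich measurements per week have been reduced to a single letter.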

The base data series used for this example:

In order to write this post, I used the Microsoft Excel random number generator function. I asked it to generate a set of 65 random numbers between 1 and 40 and then I broke these down into imaginary weeks. All the analysis above was performed on pure randomness.
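For anyone who wants to repeat the experiment without Excel, here’s a minimal Python sketch of the same idea: 65 random whole numbers from 1 to 40 (`random.randint` includes both ends), split into weeks of 13, 15, 14, 11 and 12 things, then pushed through both targets. The seed is arbitrary, ‘met’ is assumed to mean 30 days or fewer, and `simulate_weeks` is a hypothetical name; it won’t reproduce the post’s exact numbers.

```python
import random

WEEK_SIZES = [13, 15, 14, 11, 12]  # things achieved per week in the post (65 in total)

def simulate_weeks(seed=None, low=1, high=40, target_days=30, target_prop=0.80):
    """Generate random durations per week and score them against both targets.

    Assumption: a duration of target_days or fewer counts as meeting the target.
    Returns a list of (durations, whole-number % met, 'Y'/'N' against target_prop).
    """
    rng = random.Random(seed)
    results = []
    for size in WEEK_SIZES:
        # randint includes both end points, so values run from low to high inclusive
        durations = [rng.randint(low, high) for _ in range(size)]
        met = sum(1 for d in durations if d <= target_days)
        pct = round(100 * met / size)
        flag = "Y" if met / size >= target_prop else "N"
        results.append((durations, pct, flag))
    return results

for week, (_, pct, flag) in enumerate(simulate_weeks(seed=1), start=1):
    print(f"Week {week}: {pct}% within 30 days; 80% target met? {flag}")
```

Change the seed and you’ll get a different set of ‘good’ and ‘bad’ weeks from exactly the same underlying randomness.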

Here’s what the individual values look like when graphed over time:

(Noting that instances 1 – 13 are as per the graph at point 1, albeit squashed together)

Some key points:

- There is nothing special about any of the individual data points

- The 30-day target has got nothing to do with the data

- There is nothing special about any of the five (made-up) weeks I split the data into

- The 80% target on the 30-day target has got nothing to do with anything!
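How special is a week that ‘passes’? Under the same assumptions as the random data (each value a whole number from 1 to 40, with 30 or fewer counting as met), each thing has a 30-in-40 chance of meeting the 30-day target, so the number of ‘Y’s in a week follows a binomial distribution. This sketch works out the chance that a week of pure randomness meets the 80% target; the function and constant names are hypothetical.

```python
from fractions import Fraction
from math import ceil, comb

P_SINGLE = Fraction(30, 40)  # chance one random duration (1 to 40) is 30 days or fewer
TARGET = Fraction(4, 5)      # the 80% target, kept exact to avoid rounding surprises

def prob_week_meets_target(n, p=P_SINGLE, target=TARGET):
    """Binomial probability that at least `target` of n independent things meet 30 days."""
    k_min = ceil(target * n)  # fewest 'Y's that still reach the target
    total = sum(comb(n, k) * p**k * (1 - p)**(n - k) for k in range(k_min, n + 1))
    return float(total)

for n in [13, 15, 14, 11, 12]:
    print(f"Week of {n} things: P(meets 80% target) = {prob_week_meets_target(n):.2f}")
```

For a 13-thing week this comes out at roughly one in three, so a mix of ‘red’ and ‘green’ weeks is what we should expect from pure chance.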

The point: Whilst I would want to throw away all the ‘targets’, ‘measures of target’ and ‘targets on measures of target’…I would like to understand the system and why it varies.

This is where our chance of improving the system is, NOT in the traditional measures.

Our reality:

You might be laughing at the above, and thinking how silly the journey is that I’ve taken you on…

…but, the ‘targets on (measures of (targets on (measures of things)))’ thing is real and all around us.

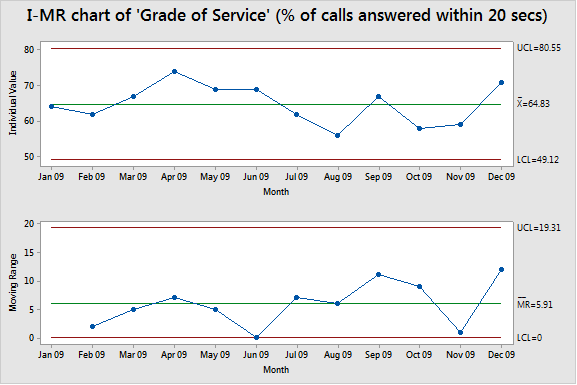

- 80% of calls answered within 20 seconds

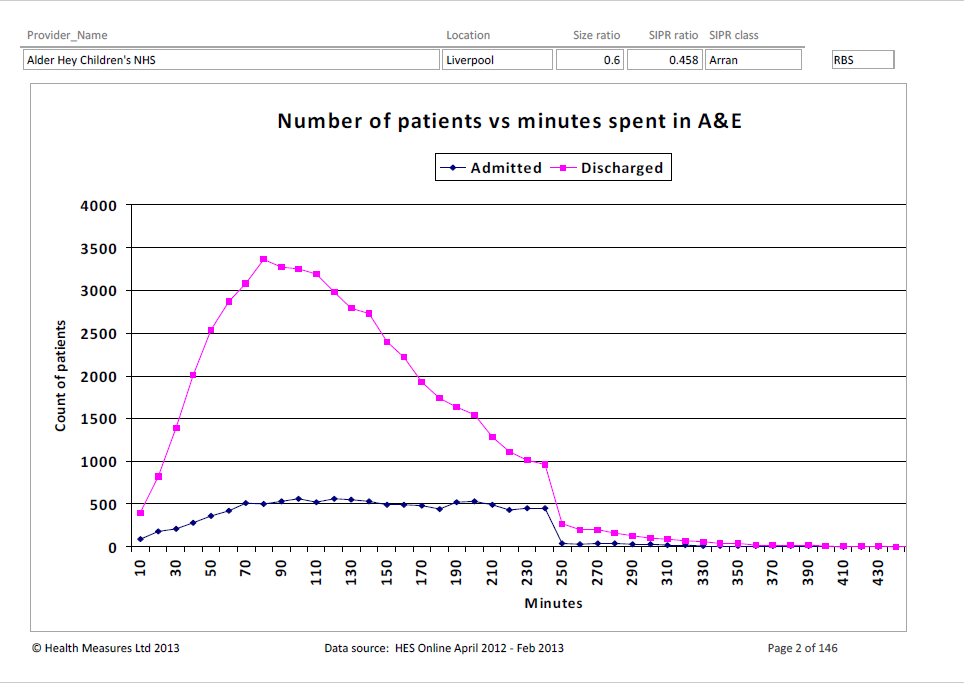

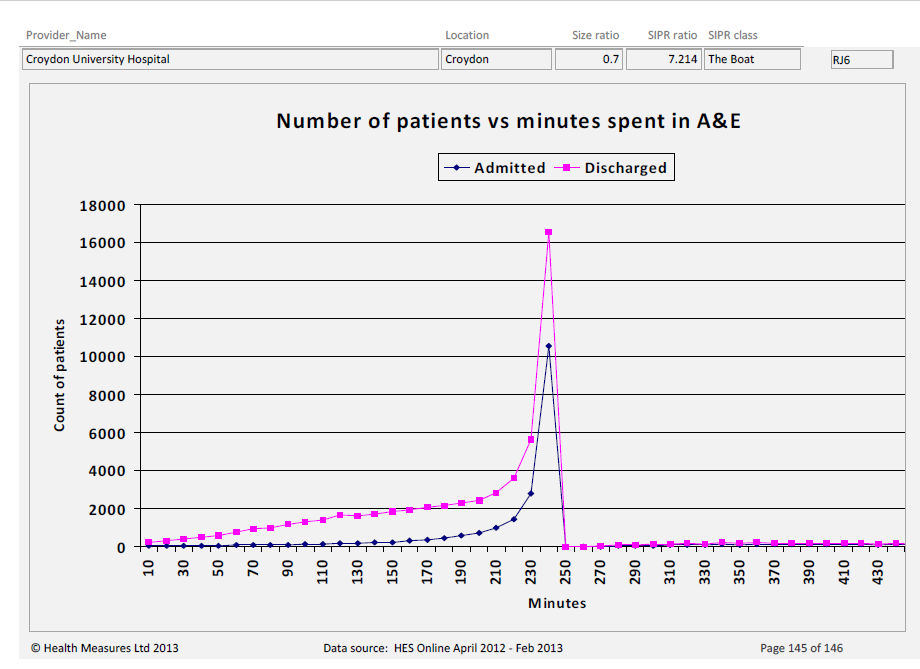

- 95% of patients discharged from the Emergency department within 4 hours

- 70% of files closed within a month

- [look for and add your own]

- …

Starting from a position of targets and working backwards:

If you’ve got a target and I take it away from you…

…but I still ask you “so tell me, how is [the thing] performing?” then what do you need to do to answer?

Well, you would now need to ponder how the thing has been performing – you would need to look at a valid measure of the thing over time and ponder what this shows.

In a nutshell: If you’ve got a target, take it away BUT still ask yourself “how are we doing?”

A likely challenge: “But it’s hard!”

Yes… if you peel back the layers of the ‘targets on targets’ onion so that you get back to the core of what’s actually going on, then you could be faced with lots of data.

I see the (incorrect) target approach as trying to simplify what is being looked at so that it looks easy to deal with. But, in making it look ‘easy to deal with’, we mustn’t destroy the value within the data.

“Everything should be made as simple as possible, but no simpler.” (attributed to Einstein)

The right approach, when faced with a great deal of data, would be to:

- Look at it in ways that uncover the potential ‘secrets’ within (such as a histogram or a time-series plot); and

- Understand how to disaggregate the data, so that we can split it up into meaningful sub-groups. We can then:

  - compare sub-groups to consider if and how they differ; and

  - look at what’s happening within each sub-group (i.e. comparing apples with apples).
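Here’s a rough sketch of both ideas in plain Python: a text histogram and a per-sub-group summary. The bin width, the (sub-group, duration) record shape, the ‘simple’/‘complex’ labels and the function names are all hypothetical; in real life the sub-groups should come from what you know about the work.

```python
from collections import Counter, defaultdict
from statistics import median

def text_histogram(durations, bin_width=5):
    """Count whole-day durations (1 or more) into bins 1-5, 6-10, ... and draw them with '#'.

    Empty bins between the smallest and largest value are shown too, so gaps stay visible.
    """
    counts = Counter((d - 1) // bin_width for d in durations)
    lines = []
    for b in range(min(counts), max(counts) + 1):
        lo, hi = b * bin_width + 1, (b + 1) * bin_width
        lines.append(f"{lo:>3}-{hi:<3} {'#' * counts[b]}")
    return "\n".join(lines)

def summarise_by_subgroup(records):
    """records: iterable of (subgroup, duration) pairs -> per-subgroup n, median, min, max."""
    groups = defaultdict(list)
    for subgroup, days in records:
        groups[subgroup].append(days)
    return {
        g: {"n": len(v), "median": median(v), "min": min(v), "max": max(v)}
        for g, v in groups.items()
    }

# Hypothetical records, invented for illustration only
records = [("simple", 4), ("simple", 7), ("simple", 9),
           ("complex", 26), ("complex", 33), ("complex", 38)]
print(text_histogram([days for _, days in records]))
for subgroup, stats in summarise_by_subgroup(records).items():
    print(subgroup, stats)
```

Pooled together these six values look like one widely varying process; split by sub-group, each group is far tighter and the comparison becomes apples with apples.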

To close:

If you are involved in ‘data analysis’ for management, I don’t think your role should be about ‘providing the simple (often 1-page) picture that they’ve asked for’. I would hope that you would want your profession to be about ‘how can I clearly show what’s happening and what this means?’

If you are a manager looking at measures: why would you want an (overly) simple picture so that you can review it quickly and then move on to making decisions? Wouldn’t you rather understand what is happening and why … so that good decisions can be made?

Footnotes

1. Measurement of things – a caution: We should be careful not to fall into the trap of thinking that everything is measurable or, if we aren’t measuring it, then it doesn’t matter.

There’s plenty of stuff that we know is really important even though we might not be measuring it.

2. Variation: If you’d like to understand this point, then please read some of my earlier posts, such as ‘The Spice of Life’ and ‘Falling into that trap’.

As a simple example: If you took a regular reading of your resting heart rate, don’t you think it would be weird if you got, say, 67 beats per minute every single time? You’d think that you’d turned into some sort of android!

3. Expect/can explain – clarification: this is NOT the same as ‘what we would like it to be’.

4. Arbitrary: When a numeric target is set, it is arbitrary as to which number was picked. Sure, it might have been picked with reference to something (such as 10% better than average, or the highest we’ve ever achieved, or…) but it’s arbitrary as to which ‘reference’ you choose.

5. Wrong questions: These wrong questions are then likely to cause us to jump to wrong conclusions and actions (also known as tampering). Such actions are likely to focus on individuals, rather than the system that they work within.

6. ‘Trigger’: The writing of this post was ‘triggered’ the other day when I reviewed a table of traffic-lighted (i.e. against a target) measures of targets on measures of things.
