I’ve been re-reading a book on leadership by Elliott Jaques1 and, whilst I’m not smitten with where he took his ‘Requisite Organisation’ ideas2, I respect his original thinking and really like what he had to say about the notion of leadership. I thought I’d try and set this out in a post…but before I get into any of his work:

“When I grow up I want to be a leader!”

Over the years I’ve spoken with graduate recruits/ management trainees in large organisations about their aspirations, and I often hear that ‘when they grow up’ they ‘want to lead people’.

And I think “Really? Lead who? Where? Why?”

Why is it that (many) people think that ‘to lead’ is the goal? Perhaps it is because ‘modern management’ has rammed the ‘being labelled a leader IS success’ idea down our throats.

It seems strange to me that people feel the need ‘to lead’ per se. For me, whether I would want to lead (or not) absolutely depends…on things like:

- Is there a set of people (whether large or small) that needs leading?

  - If not, then I shouldn’t be attempting to force myself upon them.

- Am I passionate about the thing that ‘we’ want to move towards? (the purpose)

  - If not, then I’m going to find it rather hard to genuinely inspire people to follow. I would be faking it.

- Do I (really) care about those that need leading?

  - If I don’t, then this is likely to become obvious through my words and deeds.

  - ‘Really’ caring means constantly putting myself in their shoes – to understand them – and acting on what I find.

- Do I think I have the means to lead in this scenario? (e.g. the necessary cognitive capacity/ knowledge/ skills/ experience)

  - If someone else in the group (or close by) is better placed to lead in this scenario (for all the reasons above), then I should welcome this, and even seek them out3 – and not ‘fight them for it’.

I think we need to move on from the simplistic ‘I’m a leader!’ paradigm.

So, turning to what Jaques had to say…

Defining leadership

Jaques noted that “the concept of leadership is rarely defined with any precision”. He wrote that:

“Good leadership is one of the most valued of all human activities. To be known as a good leader is a great accolade… It signifies the talent to bring people together…to work effectively together to meet a common goal, to co-operate with each other, to rely upon each other, to trust each other.”

I’d ask you to pause here, and have a think about that phrase “to be known as a good leader…”

How many ‘good leaders’ have you seen?

I’d suggest that, given the number of people we come across in (what have been labelled as) ‘leadership’ positions, it is rare for us to mentally award the ‘good leader’ moniker.

We don’t give out such badges easily – we are rather discerning.

Why? Because being well led really matters to us. It has a huge impact upon our lives.

The ‘personality’ obsession

We (humans) seem to have spent much time over the last few decades trying to create a list of the key personality characteristics that are said to determine a good leader.

There have been two methods used to create such lists, which Jaques explains as follows:

“Most of the descriptions of leadership have focused on superiority or shortcomings in personal qualities in people and their behaviour. Thus, much has been written:

- about surveys that describe what executives do who are said to be good at leadership; or

- about the lives of well-known individuals who had reputations as ‘good leaders’ as though somehow emulating such people can help.”

Jaques believed that ‘modern management’ places far too much4 emphasis on personality make-up.

If you google ‘the characteristics of a good leader’ you will be bombarded with list upon list of what a leader should supposedly look like – with many claiming legitimacy from ‘academic exercises’ that sought out a set of people who appear to have ‘done well’, collected a myriad of attributes about them, and searched for commonality (perhaps even using some nice statistics) …and, voila, that’s ‘a leader’ right there!

If you are an organisation desiring ‘leaders’, then all you need do is find people like this. Perhaps, in time, you could pluck ‘a leader’ off a supermarket shelf.

If you ‘want to be a leader’, then all you need do is imitate the list of characteristics. After all, don’t you just ‘fake it to make it’ nowadays?

Mmm, if only leadership were so simple.

In reality, there is a huge range of personalities that will be able to lead successfully and, conversely, there will be circumstances where someone with (supposedly) the most amazing ‘leadership’ personality fit won’t succeed5. This will come back to leading the ‘who’, to ‘where’ and ‘why’.

Further, some of those ‘what makes a good leader’ lists contain some very opaque ‘characteristics’…such as that you must be ‘enthusiastic’, ‘confident’, ‘purposeful’, ‘passionate’ and ‘caring’. These are all outcomes (effects) of those four earlier ‘it depends’ questions (causes), not things that you can simply be!

Personally, I’ll be enthusiastic and purposeful about, say, reducing plastic waste in our environment but I won’t be enthusiastic and purposeful about manufacturing weapons! I suppose that Donald Trump and Kim Jong-un might be different.

Jaques wrote that:

“It is the current focus upon psychological characteristics and style that leads to the unfortunate attempts within companies to change the personalities of individuals or to maintaining procedures aimed at ‘getting a correct balance’ of personalities in working groups…

…our analysis and experience would suggest that such practises are at best likely to be counterproductive in the medium and long term…

…attempts to improve leadership by psychologically changing our ‘leaders’ serve mainly as placebos or band-aids which, however well-intentioned they may be, nevertheless obscure the grossly undermining effects of the widespread organisational shortcomings and destructive defects”

It really won’t matter what personality you (attempt to) adopt if you continue to preside over a system that:

- lives a false purpose; and

- attempts to:

  - command through budgets, detailed implementation plans, targets and cascaded objectives; and

  - control through rules, judgements and contingent rewards.

Conversely, if you help lead your organisation through meaningfully and sustainably changing the system, towards better meeting its (customer) purpose, then you will have achieved a great thing! And the people around you (employees, customers, suppliers… society) will be truly grateful – and hold you in high regard – even if they can’t list a set of ‘desirable’ traits that you displayed along the way.

Peter Senge, in his systems thinking book ‘The Fifth Discipline’ writes that:

“Most of the outstanding leaders I have had the privilege to know possess neither striking appearance nor forceful personality. What distinguishes them is the clarity and persuasiveness of their ideas, the depth of their commitment, and the extent of their openness to continually learning more.

They do not ‘have the answer’, but they seem to instil confidence in those around them that, together, ‘we can learn whatever we need to learn in order to achieve the results we truly desire’.”

To close the ‘personality’ point – Jaques believed that:

“The ability to exercise leadership is not some great ‘charismystery’ but is, rather, an ordinary quality to be found in Everyman and Everywoman so long as the essential conditions exist…

…Charisma is a quality relevant only to cult leadership”.

We should stop the simplistic labelling of “this one here is a leader, and that one over there is not”.

Manager? Leader? Or are we confusing the two?!

So, back to that ‘Manager or Leader’ debate.

It feels to me that many an HR department hit upon the word ‘leader’, say, 10 years ago and, considering it highly desirable, decided that it would be a good idea to do a ‘find and replace’ throughout their organisation’s lexicon, i.e. find wherever the word ‘Manager’ is used and replace (in their eyes, ‘upgrade’) it with the word ‘Leader’.

And so we got ‘Team Leaders’ instead of ‘Team Managers’ and ‘Senior Leadership’ instead of ‘Senior Management’…and on and on.

And this changed everything, and nothing.

Jaques explained that:

“Leadership is not a free-standing activity: it is one function, among many, that occurs in some but not all roles.”

“Part of the work of the role [of a manager] is the exercise of leadership, but it is not a ‘leadership role’ any more than it would be called a telephoning role because telephoning is also a part of the work required.”

Peter Senge writes that:

“we encode a broader message when we refer to such people as the leaders. That message is that the only people with power to bring about change are those at the top of the hierarchy, not those further down. This represents a profound and tragic confusion.”

And so to three important leadership concepts: Accountability, Authority and Responsibility.

Accountability

Put simply, the occupant of a role is accountable:

- for achieving what has been defined as requirements of the role; and

- to the person or persons who have established that role.

Jaques writes that “management without leadership accountability is lifeless…leadership accountability should automatically be an ordinary part of any managerial role.”

Such leadership isn’t bigger than, or instead of, management – it is just a necessary part within. As such, it doesn’t make sense to say that “he/she is a good manager, but not a good leader”.

Authority

Authority is that which enables someone to carry out the role that they are accountable for.

“In order to discharge accountability, a person in a role must have appropriate authority; that is to say, authority with respect to the use of materials or financial resources or with respect to other people making it reasonably possible to do what needs to be done.”

Jaques goes on to split authority into ‘authority vested’ and ‘authority earned’.

“Role-vested authority by itself, properly used, should be enough to produce a minimal satisfactory result, by means of [people] doing what they are role bound to do. What it cannot do is to release the full and enthusiastic co-operation of others…

…personally earned authority is needed if people are to go along with us, using their full competence in a really willing and enthusiastic way; it carries the difference between a just-good-enough result and an outstanding or even scintillating one.”

In short, managers have to (continually) earn the trust and respect of their people.

(You might like to revisit an earlier post that explained Scholtes’ excellent diagram on trust – People and Relationships).

Responsibility

Let’s suppose that you are at the scene of a traffic accident. If you are on your own you will likely take on the social responsibility of doing the best you can in the circumstances. If others are there (say there is a crowd), you will likely assess whether you have special knowledge that is not already present:

- Is anyone attending to the injured? If not, what can you do?

- If first aid is underway, do (you believe that) you know more than they appear to? Can you be of assistance to what they are already doing?

- If the police are not yet there, what can you do to secure the safety of others, such as warning other traffic?

In such circumstances, nobody carries the authority to call you to account (unless you knowingly do something illegal).

Jaques explains this as the general leadership responsibility and does so to:

“show how deeply leadership notions are embedded in the most general issues of social conscience, social morality, and the general social good.”

“General leadership responsibility must apply even where a person’s role does not carry leadership accountability…[employees] must strive to carry leadership responsibility, even towards their managers, whenever they consider it to be for everyone’s good for them to do so.”

The understanding of the difference between leadership accountability and general leadership responsibility (for the good of society, or a sub-set within) makes clear that it is never a case of “I’m the leader and you’re not.”

Jaques went on to write that:

“The effective and sensible discharge of general leadership responsibility is one sign of a healthy collaborative organisation.”

…and finally, to react to a likely critique:

“You’re so naïve Steve!”

Many of you reading this post may think me naïve. You may reply that there are, and will always be, people out there who want to feel the power and ego (self-importance) of being labelled as ‘a leader’…and yet (regardless of their words) don’t actually care about the ‘who, where and why’ of leading. You might cite a large swathe of politicians and senior corporate executives as evidence.

Yep, I’d agree that there will be people out there like this who will ‘play the game’ and work their way into (supposed) ‘leadership’ positions…but I don’t believe that such “I’m a leader!” people are likely to make ‘good leaders’ (in the sense of what Jaques defines as leadership). Sure, they can play the ‘leader’ game, but what really counts is whether a system (such as an organisation, or a community) meaningfully and sustainably moves towards its true purpose, for the good of society.

Senge writes that:

“the term ‘leader’ is generally an assessment made by others. People who are truly leading seem rarely to think of themselves in that way. Their focus is invariably on what needs to be done, the larger system in which they are operating, and the people with whom they are creating – not on themselves as ‘leaders’. Indeed, if it is otherwise, this is probably a problem. For there is always the danger, especially for those [installed into] leadership positions, of becoming ‘heroes in their own minds’.”

In summary:

If there is a need, and a person really cares about the purpose and the people, and they have the means, then they will likely lead well – regardless of their personality type whilst doing so.

Conversely, it doesn’t matter what ‘an amazing person’ someone might (appear to) be if the conditions for ‘leading’ aren’t there.

‘Winning’ at becoming ‘the leader’ shouldn’t be the goal.

Footnotes

1. Elliott Jaques (1917 – 2003) was a Canadian psychoanalyst and organizational psychologist.

2. Requisite Organisation: Jaques wrote a book called the Requisite Organisation, which puts many of his ideas together. Personally, I find the ideas interesting but ‘of a time’ and/or of a particular ‘hierarchical’ mindset.

3. Seeking out the person best placed to lead: This would be a sign that you cared more about the purpose and the people than leading.

4. Regarding ‘far too much emphasis on personality’: Notice that Jaques says ‘too much’ but he doesn’t say that personality is irrelevant. But, rather than come up with what qualities ‘we’ should have, he turns it the other way around. A managerial leader should have:

“The absence of abnormal temperamental or emotional characteristics that disrupt the ability to work with others.”

This is nice. It presumes that, so long as we aren’t ‘abnormal’ then any of us can lead given the necessary conditions.

5. Winston Churchill is often used as an example of a great leader – and he was, under certain circumstances…but many historians have written about how this didn’t carry through to every situation (such as running a country in peacetime).

This post is a promised follow up to the recent

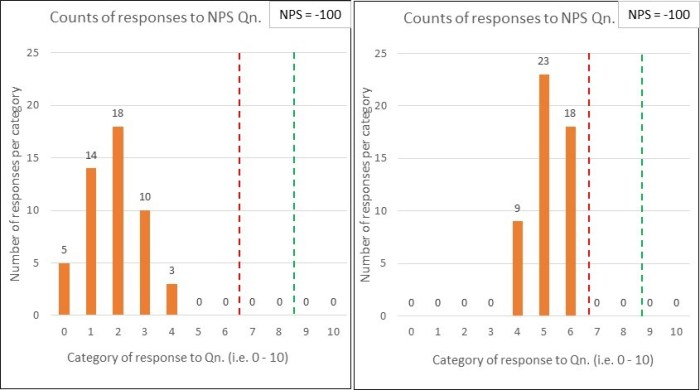

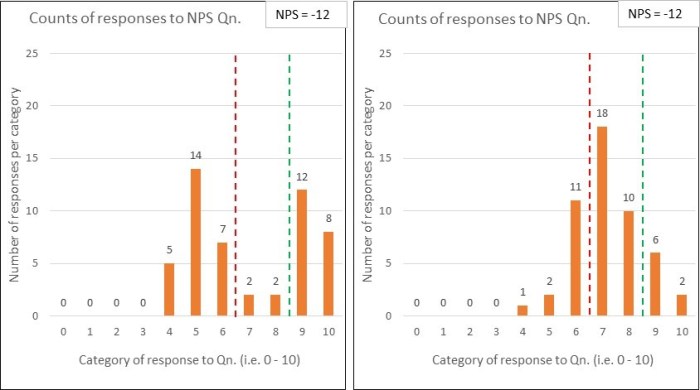

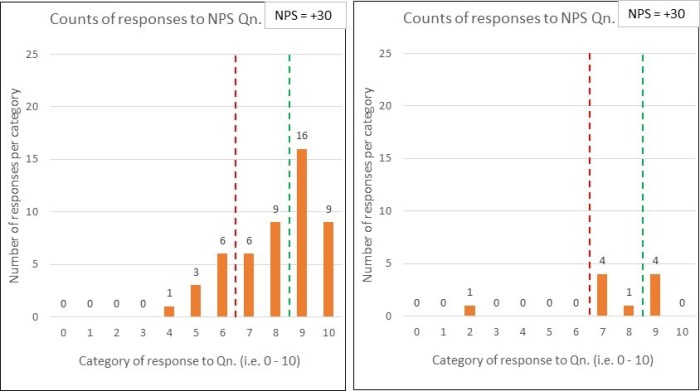

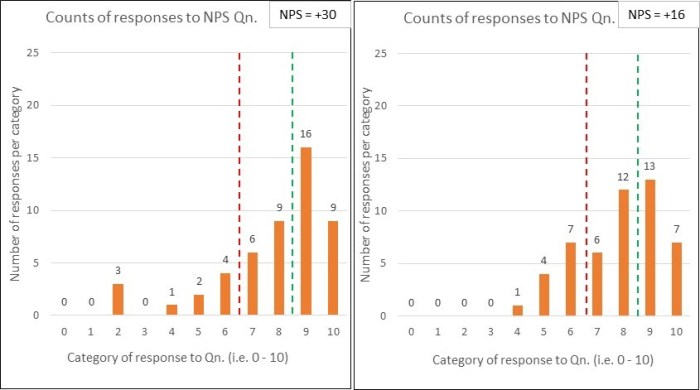

This post is a promised follow up to the recent A respondent scoring a 9 or 10 is labelled as a ‘Promoter’;

A respondent scoring a 9 or 10 is labelled as a ‘Promoter’;

Have you heard people telling you their NPS number? (perhaps with their chests puffed out…or maybe somewhat quietly – depending on the score). Further, have they been telling you that they must do all they can to retain or increase it?1

Have you heard people telling you their NPS number? (perhaps with their chests puffed out…or maybe somewhat quietly – depending on the score). Further, have they been telling you that they must do all they can to retain or increase it?1 You then work out the % of your total respondents that are Promoters and Detractors, and subtract one from the other.

You then work out the % of your total respondents that are Promoters and Detractors, and subtract one from the other. I’ve been re-reading a book on leadership by Elliott Jaques1 and, whilst I’m not smitten with where he took his ‘Requisite Organisation’ ideas2, I respect his original thinking and really like what he had to say about the notion of leadership. I thought I’d try and set this out in a post…but before I get into any of his work:

I’ve been re-reading a book on leadership by Elliott Jaques1 and, whilst I’m not smitten with where he took his ‘Requisite Organisation’ ideas2, I respect his original thinking and really like what he had to say about the notion of leadership. I thought I’d try and set this out in a post…but before I get into any of his work: We (humans) seem to have spent much time over the last few decades trying to create a list of the key personality characteristics that are said to determine a good leader.

We (humans) seem to have spent much time over the last few decades trying to create a list of the key personality characteristics that are said to determine a good leader. So, back to that

So, back to that  There’s a lovely idea which I’ve known about for some time but which I haven’t yet written about.

There’s a lovely idea which I’ve known about for some time but which I haven’t yet written about.

To seek: search for, attempt to find something.

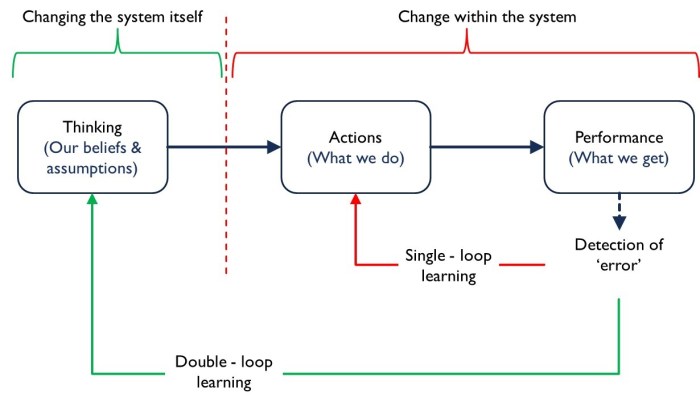

To seek: search for, attempt to find something. “Management thinking affects business performance just as an engine affects the performance of an aircraft. Internal combustion and jet propulsion are two technologies for converting fuel into power to drive an aircraft.

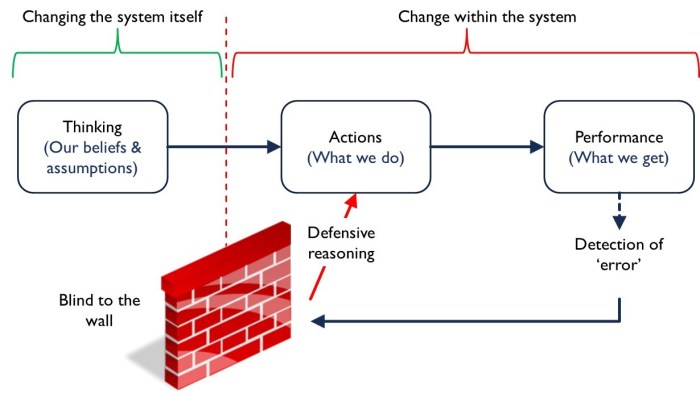

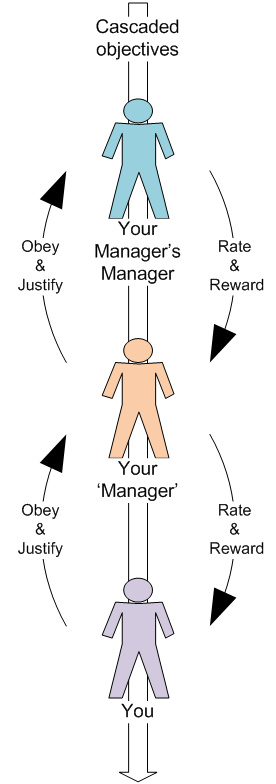

“Management thinking affects business performance just as an engine affects the performance of an aircraft. Internal combustion and jet propulsion are two technologies for converting fuel into power to drive an aircraft.  You will tell your manager what you think he/she wants to hear, and provide

You will tell your manager what you think he/she wants to hear, and provide  Governments all over the world want to get the most out of the money they spend on public services – for the benefit of the citizens requiring the services, and the taxpayers footing the bill.

Governments all over the world want to get the most out of the money they spend on public services – for the benefit of the citizens requiring the services, and the taxpayers footing the bill. He takes us through a case study of a real person in need, and their interactions with multiple organisations (many ‘front doors’) and how the traditional way of thinking seriously fails them and, as an aside, costs the full system a fortune.

He takes us through a case study of a real person in need, and their interactions with multiple organisations (many ‘front doors’) and how the traditional way of thinking seriously fails them and, as an aside, costs the full system a fortune. He identifies three survival principles in play, and the resulting anti-systemic controls that result:

He identifies three survival principles in play, and the resulting anti-systemic controls that result: Cox makes the obvious point that the actual redesign can’t be explained up-front because, well…how can it be -you haven’t studied your system yet!

Cox makes the obvious point that the actual redesign can’t be explained up-front because, well…how can it be -you haven’t studied your system yet! [Once you’ve successfully redesigned the system] “The primary focus is on having really good citizen-focused measure: ’are you improving’, ‘are you getting better’, ‘is the demand that you’re placing reducing over time’.”

[Once you’ve successfully redesigned the system] “The primary focus is on having really good citizen-focused measure: ’are you improving’, ‘are you getting better’, ‘is the demand that you’re placing reducing over time’.” For those rugby fans among you – and virtually every New Zealander –

For those rugby fans among you – and virtually every New Zealander –  Sir Ian explained that he would sit down with his team of coaches (perhaps five people) and work through all the analysis and then discuss, often for hours deep into the night. He provided this wonderful insight:

Sir Ian explained that he would sit down with his team of coaches (perhaps five people) and work through all the analysis and then discuss, often for hours deep into the night. He provided this wonderful insight: When I was growing up, I remember my dad (a Physicist) telling me that it was pointless, and in fact meaningless, to be accurate with an estimate: if you’ve worked out a calculation using a number of assumptions, there’s no point in writing the answer to 3 decimal places! He would say that my ‘accurate’ answer would be wrong because it is misleading. The reader needs to know about the possible range of answers – i.e. about the uncertainty – so that they don’t run off thinking that it is exact.

When I was growing up, I remember my dad (a Physicist) telling me that it was pointless, and in fact meaningless, to be accurate with an estimate: if you’ve worked out a calculation using a number of assumptions, there’s no point in writing the answer to 3 decimal places! He would say that my ‘accurate’ answer would be wrong because it is misleading. The reader needs to know about the possible range of answers – i.e. about the uncertainty – so that they don’t run off thinking that it is exact. Let’s say that someone in senior management (we’ll call her Theresa) wants to carry out a major organisational change that (the salesman said) will change the world as we know it!

Let’s say that someone in senior management (we’ll call her Theresa) wants to carry out a major organisational change that (the salesman said) will change the world as we know it! Some (and perhaps all) of the tweaks might have logic to them…but for every assumption being made (supposedly) tighter:

Some (and perhaps all) of the tweaks might have logic to them…but for every assumption being made (supposedly) tighter: It’s not just financial models within business cases – it is ‘detailed up-front’ planning in general: the idea that we should create a highly detailed plan before making a decision (usually by hierarchical committee) as to whether to proceed on a major investment.

It’s not just financial models within business cases – it is ‘detailed up-front’ planning in general: the idea that we should create a highly detailed plan before making a decision (usually by hierarchical committee) as to whether to proceed on a major investment.